Cybersecurity is a discipline with many moving parts. At its core, though, it is a tool to help organisations identify, protect, detect, respond, and recover, and then adapt to the ever-evolving risks posed by new technologies and by the capabilities that threat actors employ. Threat modelling is one of the main ways organisations do this. Sometimes these threats are minor, causing annoyance but no real damage; sometimes they are existential and unpredictable. The latter are known as Black Swan events.

They represent threats or attacks that fall outside the boundaries of standard threat models, often blindsiding organisations despite rigorous security practices.

In this post, we’ll explore the relationship between cybersecurity threat modelling and Black Swan events, and how to better prepare for the unexpected.

What Are Black Swan Events?

The term Black Swan was popularized by the statistician and risk analyst Nassim Nicholas Taleb. He described Black Swan events as:

- Highly improbable: These events are beyond the scope of regular expectations, and no prior event or data hints at their occurrence.

- Extreme impact: When they do happen, Black Swan events have widespread, often catastrophic, consequences.

- Retrospective rationalization: After these events occur, people tend to rationalize them as being predictable in hindsight, even though they were not foreseen at the time.

In cybersecurity, Black Swan events can be seen as threats or attacks that emerge suddenly from unknown or neglected vectors—such as nation-state actors deploying novel zero-day exploits, or a completely new class of vulnerabilities being discovered in widely used software.

The Limits of Traditional Threat Modelling

Threat modelling is a systematic approach to identifying security risks within a system, application, or network.

It typically involves:

- Identifying assets: What needs protection (e.g., data, services, infrastructure)?

- Defining threats: What could go wrong? Common threats include malware, phishing, denial of service (DoS) attacks, and insider threats.

- Assessing vulnerabilities: How could the threats exploit system weaknesses?

- Evaluating potential impact: How severe would the consequences of an attack be?

- Mitigating risks: What steps can be taken to reduce the likelihood and impact of threats?

While highly effective for many threats, traditional threat modelling is largely based on past experience and known attack methods. It relies on patterns, data, and risk profiles developed from historical analysis. However, Black Swan events, by their nature, evade these models because they represent unknown unknowns—threats that have never been seen before or that arise in ways no one could predict. This is where organisations often encounter significant challenges. Despite extensive security efforts, unknown vulnerabilities, unexpected technological changes, or even human error can expose them to unforeseen, high-impact cyber events.
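To make this limitation concrete, here is a minimal sketch of the kind of likelihood-times-impact scoring that many risk registers use. The threat names, probabilities, and impact figures are all invented for illustration and are not drawn from any real assessment. The point is only that a threat whose likelihood is estimated from history as near zero ends up at the bottom of the list, even when its impact dwarfs everything else.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: float  # estimated annual probability, 0.0 to 1.0 (illustrative)
    impact: float      # estimated loss if it occurs, arbitrary units (illustrative)

    @property
    def risk_score(self) -> float:
        # Classic expected-loss style score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def rank_threats(threats):
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk_score, reverse=True)


# Hypothetical register: every number here is an assumption for the example.
register = [
    Threat("Phishing campaign", 0.60, 50),
    Threat("DoS attack", 0.30, 80),
    Threat("Insider data theft", 0.10, 200),
    Threat("Novel supply-chain compromise", 0.001, 10_000),
]

if __name__ == "__main__":
    for threat in rank_threats(register):
        print(f"{threat.name}: score {threat.risk_score:.1f}, impact {threat.impact}")
```

With these made-up numbers, the supply-chain compromise has by far the largest impact but the lowest score, so a team working strictly down the ranked list would address it last, if at all.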

Real-World Examples of Cybersecurity Black Swan Events

1. The SolarWinds Hack (2020)

The SolarWinds cyberattack, attributed to a nation-state actor, was one of the most devastating and unexpected breaches in recent history. Attackers compromised the software supply chain by embedding malicious code into SolarWinds’ Orion software updates, which were then distributed to thousands of organizations, including U.S. government agencies and Fortune 500 companies.

The sophistication of the attack and the sheer scale of its impact make it a classic Black Swan event. It was a novel approach to cyber espionage, and its implications were far-reaching, affecting critical systems and sensitive data across industries.

2. NotPetya (2017)

The Petya ransomware that launched in 2016 was a standard ransomware tool, designed to encrypt data, demand payment, and then allow decryption. NotPetya, however, was something different. It introduced two changes. The first was that its encryption could not be reversed: once data was encrypted, it could not be recovered, which made it a wiper rather than ransomware. The second was its ability to leverage the EternalBlue exploit, much like the WannaCry ransomware that attacked devices worldwide earlier that year, which allowed it to spread rapidly across unpatched Microsoft Windows networks.

NotPetya is believed to have infected victims through a compromised piece of Ukrainian tax software called M.E.Doc. This software was extremely widespread among Ukrainian businesses, and investigators found that a backdoor in its update system had been present for at least six weeks before NotPetya’s outbreak.

At the time of the outbreak, Russia was still in the throes of conflict with the Ukrainian state, having annexed the Crimean peninsula just over three years prior, and the attack was timed to coincide with Constitution Day, a Ukrainian public holiday commemorating the signing of the post-Soviet Ukrainian constitution. As well as its political significance, the timing also ensured that businesses and authorities would be caught off guard and unable to respond. What the attackers did not consider, however, was how widespread that software was. Any company, local or international, that did business in Ukraine likely had a copy of it. When the attackers struck, they hit multinationals including the massive shipping company A.P. Møller-Maersk, the pharmaceutical company Merck, the delivery company FedEx, and many others. Aside from crippling these companies, the attack's reverberations were felt in global shipping and across multiple business sectors.

NotPetya is believed to have resulted in more than $10 billion in total damages across the globe, making it one of the most expensive cyberattacks in history to date, if not the most expensive.

How to Prepare for Cybersecurity Black Swan Events

While it’s impossible to predict or completely prevent Black Swan events, there are steps that organisations can take to enhance their resilience and minimise potential damage:

1. Adopt a Resilience-Based Approach

Rather than solely focusing on known threats, build your cybersecurity strategy around resilience. This means being prepared to rapidly detect, respond to, and recover from attacks, regardless of their origin.

Organisations should prioritise:

- Incident response plans: Have well-documented and tested response procedures in place for any type of security event.

- Redundancy and backups: Ensure critical systems and data have redundant layers and secure backups that can be quickly restored.

- Post-event recovery: Create strategies to mitigate the damage and recover swiftly, minimising long-term business disruption.
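As one small illustration of what "tested" can mean for backups, the sketch below records a SHA-256 digest when a backup is taken and checks the restored copy against it. The file names and the single-file layout are assumptions made for the example; a real backup regime would cover far more than checksums, such as offline copies, access controls, and timed restore drills.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(restored: Path, expected_digest: str) -> bool:
    """Check that a restored file matches the digest recorded at backup time."""
    return sha256_of(restored) == expected_digest


def demo():
    """Back up a file, restore it, then simulate corruption of the restore."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        original = root / "records.db"        # hypothetical critical file
        original.write_bytes(b"critical business data")

        backup = root / "records.db.bak"
        shutil.copy2(original, backup)        # take the backup
        recorded = sha256_of(original)        # store the digest alongside it

        restored = root / "restored.db"
        shutil.copy2(backup, restored)        # restore drill
        clean = verify_restore(restored, recorded)

        restored.write_bytes(b"corrupted")    # simulate a damaged restore
        damaged = verify_restore(restored, recorded)
        return clean, damaged


if __name__ == "__main__":
    print(demo())
```

Recording the digest at backup time matters because, in a destructive incident, the original may no longer exist to compare against.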

2. Encourage Continuous Security Research and Innovation

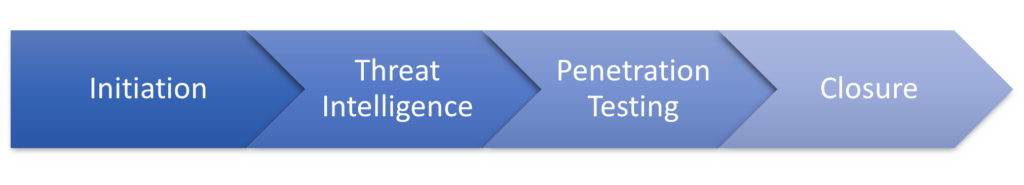

Security Testing: Many Black Swan events are the result of the exploitation of previously unknown vulnerabilities. Investing in continuous security research and vulnerability discovery (through bug bounty programs, penetration testing, etc.) can reduce the number of undiscovered vulnerabilities and improve overall system security.

Defence Engineering: Implement defensive measures such as application isolation, network segmentation, and behaviour monitoring to limit the damage if a zero-day vulnerability is exploited.

3. Utilize Cyber Threat Intelligence

Staying informed on emerging cybersecurity trends and participating in industry collaborations can give organisations an edge when it comes to detecting potential Black Swan events. By sharing information, organisations can learn from others’ experiences and uncover threats that might not have been apparent within their own systems.

4. Model Chaos and Test the Unthinkable

Chaos engineering, which involves intentionally introducing failures into systems to see how they respond, can be an effective way to test the robustness of an organization’s defences. These drills can help security teams explore what might happen during an unanticipated event and can uncover system weaknesses that might otherwise be overlooked.
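A very small sketch of the idea follows, assuming a hypothetical `lookup_user` dependency and a made-up 30% failure rate. It wraps the dependency so that a fraction of calls fail on purpose, then checks whether the calling code degrades gracefully rather than crashing. Production chaos experiments run against real infrastructure with dedicated tooling and careful safeguards; this only shows the shape of the technique.

```python
import random


class InjectedFault(Exception):
    """Raised by the fault injector to simulate a dependency outage."""


def with_fault_injection(func, failure_rate, rng):
    """Wrap func so that roughly failure_rate of calls raise InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"simulated failure in {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


def lookup_user(user_id):
    """Hypothetical primary dependency, for example an identity service."""
    return {"id": user_id, "source": "primary"}


def lookup_user_resilient(user_id, primary):
    """Return a degraded fallback answer when the primary dependency fails."""
    try:
        return primary(user_id)
    except InjectedFault:
        return {"id": user_id, "source": "fallback"}


def run_experiment(calls=1000, failure_rate=0.3, seed=42):
    """Inject faults and report how many calls were answered via fallback."""
    rng = random.Random(seed)  # seeded so the drill is repeatable
    flaky = with_fault_injection(lookup_user, failure_rate, rng)
    results = [lookup_user_resilient(i, flaky) for i in range(calls)]
    fallbacks = sum(1 for r in results if r["source"] == "fallback")
    return {"calls": calls, "answered": len(results), "fallbacks": fallbacks}


if __name__ == "__main__":
    print(run_experiment())
```

If `answered` ever fell below `calls`, or the experiment raised an unhandled exception, that would point to a path where a failure is not contained, which is exactly the kind of weakness these drills are meant to surface.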

5. Promote a Culture of Adaptive Security

Adopting an adaptive security mindset means continuously monitoring the threat landscape, adjusting security controls, and being willing to evolve when necessary. The concept of security-by-design—where security considerations are built into the very foundation of systems and software—will also help organisations stay ahead of new and unforeseen risks.

Black Swan events in cybersecurity may be rare, but their consequences can be catastrophic. The unpredictability of these threats poses a unique challenge, requiring organisations to shift from a purely reactive, known-threat approach to one that emphasises resilience, adaptation, and continuous learning.

Red Team engagements are one tool that can help organisations develop resilient security strategies designed to respond to Black Swans. What makes this possible are some of the key concepts, controls, and attitudes that are introduced during the planning stages of the engagement. The results of red team engagements using this approach help shape boardroom discussions around strategy, resilience, and capacity in a way that allows the business to anticipate Black Swans and be prepared should they ever arrive.

Our Red Team services: https://prisminfosec.com/service/red-teaming-simulated-attack/