Prism Infosec, an established CHECK accredited Penetration Testing company, is pleased to announce that we have achieved accreditation status as a Threat-Led Penetration Testing (TLPT) provider under the CBEST scheme, the Bank of England’s rigorous regulator-led scheme for improving the cyber resiliency of the UK’s financial services, supported by CREST.

This follows our recent accreditation as a STAR-FS Intelligence-led Penetration Testing (ILPT) provider in November 2024. These accreditations put us in a very exclusive set of UK providers who have demonstrated the skills, tradecraft, methodology, and ability to deliver complex, risk-managed testing to the standard required for trusted testing of UK critical financial sector organisations.

Financial Regulated Threat Led Penetration Testing (TLPT) / Red Teaming

The UK is a market leader when it comes to helping organisations improve their resiliency to cyber security threats. This is in part due to the skills, talent, and capabilities of our mature cybersecurity sector, developed thanks to accreditation and certification schemes that began with the UK CHECK scheme for UK Government Penetration Testing in the mid-2000s. As the UK matured, new schemes covering more adversarial types of threat simulation began to evolve for additional sectors. Today, across the globe, other schemes have been rolled out to emulate what we in the UK have been delivering for financial markets since 2014 in terms of resiliency testing against cyber security threats. This post examines two of the financial-sector oriented, UK-based frameworks, CBEST and STAR-FS, explaining how they work and how Prism Infosec can support our clients in these engagements.

What is CBEST?

CBEST (originally called Cyber Security Testing Framework but now simply a title rather than an acronym) provides a framework for financial regulators (both the Prudential Regulation Authority (PRA) and Financial Conduct Authority (FCA)) to work with regulated financial firms to evaluate their resilience to a simulated cyber-attack. This enables firms to explore how an attack on the people, processes and technology of a firm’s cyber security controls may be disrupted.

The aim of CBEST is to:

- test a firm’s defences;

- assess its threat intelligence capability; and

- assess its ability to detect and respond to a range of external attackers as well as people on the inside.

Firms use the assessment to plan how they can strengthen their resilience.

The simulated attacks used in CBEST are based on current cyber threats. These include the approach a threat actor may take to attack a firm and how they might exploit a firm’s online information. The important thing to take away from CBEST is that it is not a pass or fail assessment. It is simply a tool to help the organisation evaluate and improve its resilience.

How does CBEST work?

A firm is selected for test under one of the following criteria:

- The firm or financial market infrastructure (FMI) is requested by the regulator to undertake a CBEST assessment as part of the supervisory cycle. The list of those requested to undertake a review is agreed by the PRA and FCA on a regular basis in line with any thematic focus and the supervisory strategy.

- The firm/FMI has requested to undertake a CBEST as part of its own cyber resilience programme, when agreed in consultation with the regulator.

- An incident or other event has occurred that has triggered the regulator to request a CBEST in support of post-incident remediation activity and validation, and consultation/agreement has been sought with the regulator.

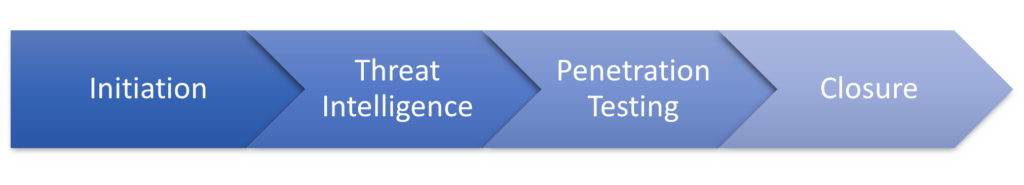

CBEST is broken down into phases, each of which contains a number of activities and deliverables:

When the decision to hold a CBEST is made, the regulator notifies the firm in writing that a CBEST should occur, and the firm has 40 working days to start the process. This occurs in the Initiation Phase of a CBEST. The firm will be required to scope the elements of the test, aligned with the implementation guide, before procuring suitably qualified and accredited Threat Intelligence Service Providers (TISP) and Penetration Testing Service Providers (PTSP), such as Prism Infosec.
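As a rough planning aid, the 40-working-day window can be estimated with a short script. This is only a sketch under stated assumptions: it treats Monday to Friday as working days, ignores UK bank holidays, and starts counting on the day after notification. None of these details are confirmed by the CBEST guidance quoted here, and the function name and notification date are hypothetical.

```python
from datetime import date, timedelta


def add_working_days(start: date, working_days: int) -> date:
    """Return the date that falls `working_days` working days after `start`.

    Assumptions (not taken from the CBEST guide): working days are
    Monday to Friday, bank holidays are ignored, and counting begins
    on the day after `start`.
    """
    current = start
    remaining = working_days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # 0-4 are Monday to Friday
            remaining -= 1
    return current


# Hypothetical notification date, for illustration only.
notified = date(2025, 1, 6)
print(add_working_days(notified, 40).isoformat())  # 2025-03-03
```

A real plan would need to account for bank holidays and confirm with the regulator how the period is counted.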

After procurement there is a Threat Intelligence Phase, which helps identify what information threat actors may gain access to, and which threat actors are likely to conduct attacks. This information is shared with the firm, the regulator and the PTSP, and is used to develop the scenarios (usually three). A full set of Threat Intelligence reports is the expected output from this phase. After the Penetration Testing Phase, the TISP will then conduct a Threat Intelligence Maturity Assessment. This is done after testing is complete to help maintain the secrecy of the testing phase.

The next phase is the Penetration Testing Phase. During this phase each of the scenarios is played out, with suitable risk management controls, to evaluate the firm’s ability to detect and respond to the threat. The PTSP works closely with the firm’s control group throughout, and regular updates are provided to the regulator on progress. After testing, the PTSP conducts an assessment of the Detection and Response (D&R) capability of the firm. Following these elements, the PTSP will provide a complete report on the activities they conducted, the vulnerabilities they identified and the firm’s D&R capability.

CBEST then moves into the Closure Phase, where a remediation plan is created by the firm and discussed with the regulator, and debrief activities are carried out between the TISP, PTSP and the regulator.

The CBEST implementation guide can be found here:

CBEST Threat Intelligence-Led Assessments | Bank of England

What is STAR-FS?

Simulated Targeted Attack and Response – Financial Services (STAR-FS)

STAR-FS is a framework for providing Threat Intelligence-led simulated attacks against financial institutions in the UK, overseen by the Prudential Regulation Authority (PRA) and the Financial Conduct Authority (FCA). STAR-FS has less regulatory oversight in comparison to CBEST, but uses the same principles and is intended to be conducted by more organisations than CBEST. STAR-FS uses the same four-phase model as CBEST.

How does STAR-FS work?

STAR-FS has been designed to replicate the rigorous approach defined within the CBEST framework that has been in use since 2015. However, STAR-FS allows for financial institutions to manage the tests themselves whilst still allowing for regulatory reporting. This means that STAR-FS can be self-initiated by a firm as part of their own cyber programme. Self-initiated STAR-FS testing could be recognised as a supervisory assessment if Regulators are notified of the STAR-FS and have the opportunity to input to the scope, and receive the remediation plan at the end of the assessment.

The Regulator, which includes the relevant Supervisory teams, receives the Regulator Summary of the STAR-FS assessment in order to inform their understanding of the Participant’s current position in terms of cyber security and to be confident that risk mitigation activities are being implemented. The Regulator’s responsibilities include receiving and acting upon any immediate notifications of issues that have been identified that would be relevant to their regulatory function. The Regulator will also review the STAR-FS assessment findings in order to inform sector specific thematic reports. Aside from these stipulations, the Regulator is not involved in the delivery or monitoring of STAR-FS engagements and does not usually attend the update calls between the firm, the TISP and the PTSP.

Like CBEST, there are also Initiation, Threat Intelligence, Penetration Testing and Closure phases, and accredited TI and PT suppliers must be used. In the Initiation and Closure phases, the firm is considered to have the lead role, whilst in the Threat Intelligence and Penetration Testing phases, the TISP and PTSPs are respectively expected to lead those elements. Again, a STAR-FS implementation guide is available to support firms undergoing testing:

STAR-FS UK Implementation Guide
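The division of lead roles described above can be summarised as a small lookup structure. This is an illustrative sketch only: the phase names and lead roles come from the description in this post, while the class and function names are hypothetical and not part of any STAR-FS tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Party(Enum):
    FIRM = "Firm"
    TISP = "Threat Intelligence Service Provider"
    PTSP = "Penetration Testing Service Provider"


@dataclass(frozen=True)
class Phase:
    name: str
    lead: Party


# Phase order and lead roles as described in this post for STAR-FS.
STAR_FS_PHASES = (
    Phase("Initiation", Party.FIRM),
    Phase("Threat Intelligence", Party.TISP),
    Phase("Penetration Testing", Party.PTSP),
    Phase("Closure", Party.FIRM),
)


def phases_led_by(party: Party) -> list[str]:
    """Return the names of the phases in which `party` has the lead role."""
    return [phase.name for phase in STAR_FS_PHASES if phase.lead is party]


print(phases_led_by(Party.FIRM))  # ['Initiation', 'Closure']
```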

How are we qualified to deliver Threat Led Penetration Testing?

Prism Infosec are one of a small handful of companies in the UK which have met the criteria mandated by the PRA and FCA to deliver STAR-FS and CBEST engagements as a Penetration Testing Service Provider. The provider must employ, and ensure that engagements are led by, a CCSAM (CREST Certified Simulated Attack Manager) and a CCSAS (CREST Certified Simulated Attack Specialist). Furthermore, the firm must have at least 14,000 hours of penetration testing experience, and the CCSAM and CCSAS must also have 4,000 hours of experience testing financial institutions. The firm must also have demonstrated its skills through delivery of penetration testing services for financial entities which are willing to act as references; these services must have been delivered within the last months prior to the application.

How do we deliver Threat Led Penetration Testing?

At Prism Infosec we pride ourselves on delivering a risk managed approach to Threat Led Penetration Testing – ensuring we deliver a test that helps us evaluate all the controls in an end-to-end test. Our goal is to help our clients understand and evaluate the risks of a cyber breach in a controlled manner which limits the impact to the business but still permits lessons to be learned and controls to be evaluated. Testing under CBEST, STAR-FS or simply commercial STAR engagements is supposed to help the firm, not hinder it, which is why we ensure our clients are kept fully informed and are able to take risk aware decisions on how best to proceed to get the best results from testing.

Prism Infosec will produce a test plan covering the scenarios, the pre-requisites, the objectives, the rules of the engagement and the contingencies required to support testing. A risk workshop will be held to discuss how risks will be minimised and agree clear communication pathways for the delivery of the engagement.

As each scenario progresses, Prism Infosec’s team will hold daily briefing calls with the client stakeholders to keep them informed, set expectations and answer questions. An out-of-band communications channel will also be set up so that stakeholders and the consultants can contact each other should the need arise. At the end of each week, an update pack outlining what has been accomplished, risks identified, contingencies used and vulnerabilities identified will be provided to the stakeholders to ensure that everyone remains fully informed.

Once testing concludes, Prism Infosec will seek to hold a Detection and Risk Assessment (DRA) workshop, which comprises two elements. The first is a light-touch, GRC-led discussion with senior stakeholders to provide an evaluation of the business against the NIST Cybersecurity Framework (CSF) 2.0; the second is a more tactical workshop with members of the defence teams to examine specific elements of the engagement. This second workshop is invaluable for defensive teams as it helps them identify blind spots in the defence tooling and gain an understanding of the tactics, techniques and procedures (TTPs) used by Prism Infosec’s consultants.

Following this, Prism Infosec will produce a comprehensive report on how each scenario played out, severity-rated vulnerabilities, a summary of the DRA workshops and information on possible improvements to support and assist the defence teams. We will also produce an executive debriefing pack and deliver debriefings tailored to C-suite executives and regulators. Finally, we will provide a redacted version of the report which can be shared with the regulator, as required under CBEST.